Querying with dbt from an external source in Snowflake

- How-Tos FAQs

- March 16, 2021

Native Tables vs External Tables in Snowflake

Let’s explore querying with dbt from an external source in Snowflake. It is very common to have data stored in public cloud storage such as Amazon S3, Google Cloud Storage or Microsoft Azure that needs to be incorporated in a business data model. There are two approaches to integrating external cloud storage data in a modern data warehouse:

- COPY INTO

- Pros: Faster Query Performance, Less Expensive to Query

- Cons: Additional Data Storage Cost, More Expensive to Modify

- External Tables

- Pros: No Additional Data Storage Cost, Less Expensive to Modify

- Cons: Slightly Slower Query Performance, More Expensive to Query

Based on the pros and cons, there is no hard and fast rule as to the right approach. The decision to use one over the other should be based on an evaluation of how the tradeoffs for each approach impact the overall solution versus requirements.

COPY INTO Approach

When loading data into a data warehouse like Snowflake, that data is being copied to a Snowflake managed storage bucket in the same cloud as your Snowflake instance and Snowflake passes off the cost to the customer. This means that when you store data in both cloud storage and your data warehouse, you are paying to store the same data twice.

In addition, if you want to modify the definition of the table, that could require that you truncate the table and reload all the historical data, which uses compute resources, and could be costly depending on the size of your data and complexity of data transformation, if any, within the load process. There are multiple ways to load data into Snowflake, but this article will focus on the other approach: using an external table.

Snowflake external tables

Snowflake external tables are essentially just metadata stored in Snowflake. This metadata defines the location, the file format, and any light data transformation processing steps, if any, that Snowflake should use to access and query external storage like Amazon S3.

In the previous approach, we define a table and create that table, then we physically move the data from the external storage to Snowflake storage and backfill the table we created with the data we moved into Snowflake. In this approach, we just store the definition for a table, which is then used as an interface between Snowflake and external data, so the data is never actually moved or loaded. Snowflake queries by reading data from the external storage location at the time of query execution. This is called “schema-on-read”, because there are no schema objects (tables) being created in Snowflake until we query the data.

Using Snowflake external tables as sources in DBT

To use an external table as a source in DBT, most of the workflow is in Snowflake. For this demonstration, sample order data was pre-loaded into an Amazon S3 bucket and appropriate permissions have been configured for Snowflake to access this bucket. The sample data was unloaded from Snowflake’s sample data table snowflake_sample_data.tpch_sf1.orders orders in standard CSV format and then uploaded into Amazon S3.

Create an external stage

Snowflake needs access to the Amazon S3 bucket. Create an external stage with the location and any credentials needed to access it.

create or replace stage s3_temp

url='s3://[bucketname]'

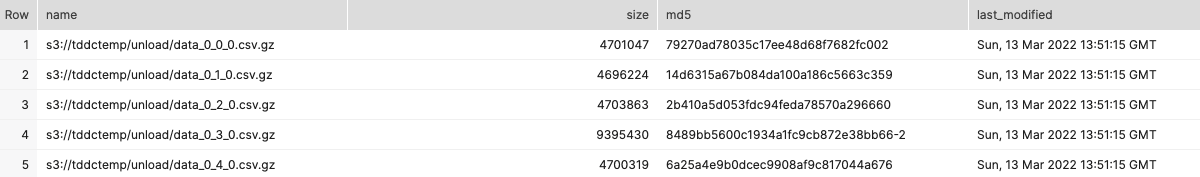

credentials=(aws_key_id='[aws_key]' aws_secret_key='[aws_secret_key]');Use list to list all the files in the external stage. Notice there are multiple files and they are compressed. We did not have to specify the file type because the files are in the default Snowflake format (gzip compressed, comma separated CSV).

list @s3_temp; The external stage can be queried directly, using column numbers.

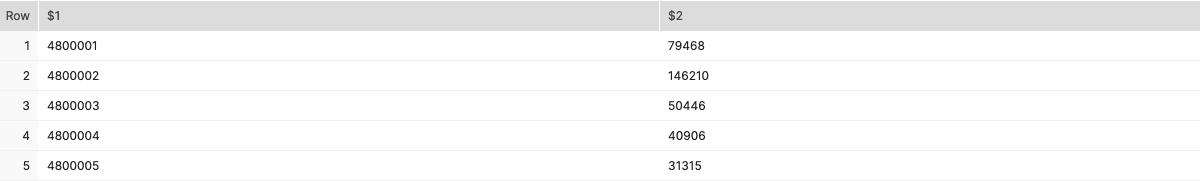

The external stage can be queried directly, using column numbers.

select $1, $2 from @s3_temp;

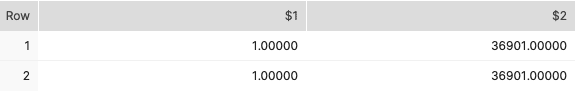

A couple of quick checks show that the stage data matches the data that was originally unloaded from Snowflake sample data.

(select $1, $2 from @s3_temp order by $1 limit 1)

union all

(select $1, $2 from snowflake_sample_data.tpch_sf1.orders order by $1 limit 1);Create an external table

Create an external table that references the external stage. A VALUE column is always returned for external tables; we do not have to specify this column in the external table definition. The VALUE has each line in your source file parsed into a variant column. The parsing to json is based on the file_format parameter. A metadata field, metadata$filename , is also available for external tables, with the full file path.

create or replace external table

s3_orders(

filename varchar as metadata$filename

)

location = @temp.public.s3_temp

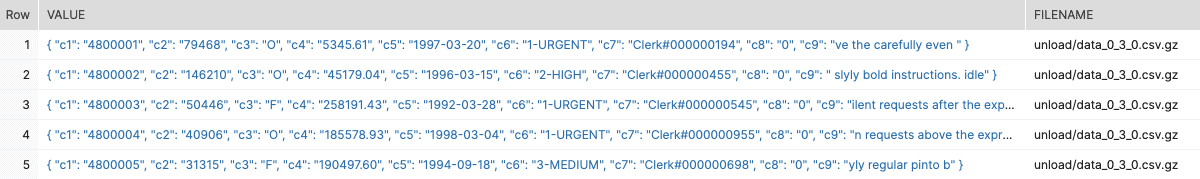

file_format = (type = 'CSV');Take a look at the external table output to see how the value field is formatted and how many columns there are.

select * from s3_orders;

We can create individual columns from the VALUE variant field using parse_json() .

create or replace external table

s3_orders(

o_orderkey number as (parse_json(value):c1::number),

o_custkey number as (parse_json(value):c2::number),

filename varchar as metadata$filename

)

location = @temp.public.s3_temp

file_format = (type = 'CSV')

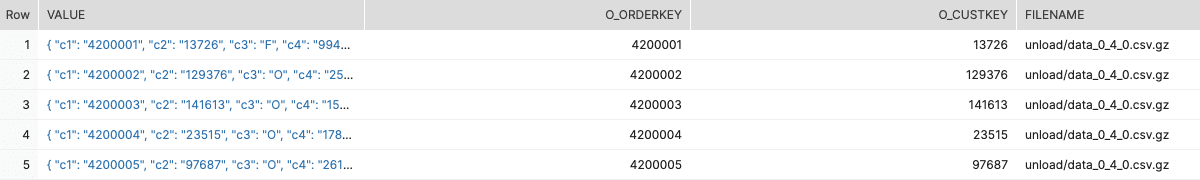

;Now o_orderkey and o_custkey are accessible as inividual fields.

select * from s3_orders;

Use the external table as a source in DBT

The external table we just created can be used in DBT just like any other table. The only problem we have now is that our external table metadata needs to be refreshed when new files are loaded into our external storage so that Snowflake knows they exist.

External tables can be set up to automatically refresh their metadata in Snowflake using notifications from the cloud provider in which your external storage is hosted. This method uses Snowflake to manage the external table.

Another option is to use the dbt-labs package dbt-external-tables , which has a macro to create and refresh external tables from dbt. Using this method, external tables are created and managed by DBT. The stage which the external table references, the database and schema for your external table, still need to be created in Snowflake.

So that’s how you query and use external sources in DBT models with Snowflake!

Continue reading

Check out: Loading Data into Snowflake with Efficiency