Tutorial06 - Building an Export Adaptor for Custom Connections

Introduction

Datameer provides a pluggable architecture to export workbook sheets into a custom connection.

Building an Export Adaptor

You can implement your own export job type and provide a dynamic wizard page and your own output adapter.

To export a workbook sheet into a custom connection you need a custom connection that supports exports. Datameer provides a prototype plug-in that supports a custom export.

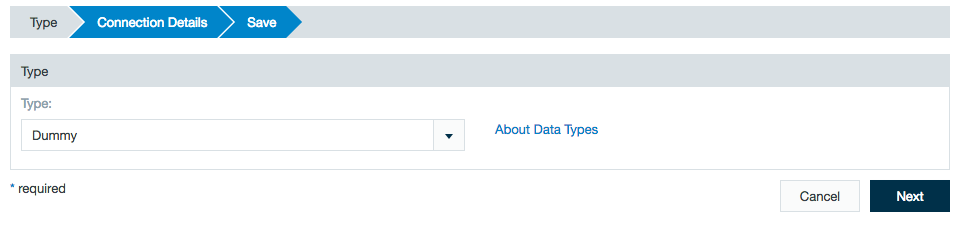

- Add a dummy connection. (This connection type provides a dummy import job type and a dummy export job type.)

- Trigger a workbook you want to export.

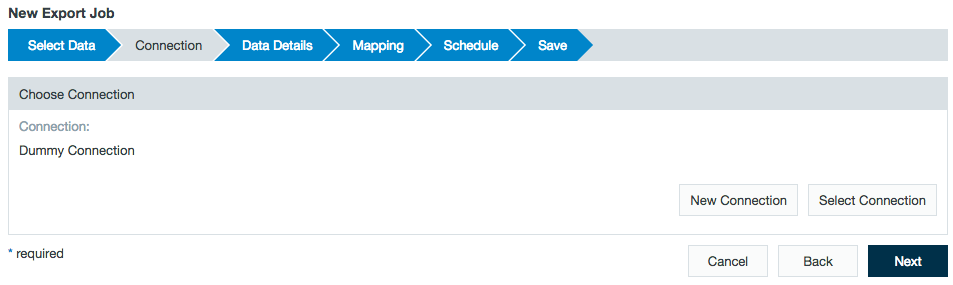

- Add an export job and select the dummy-data-store. The underlying export job prints the records to the console instead of performing a real save.

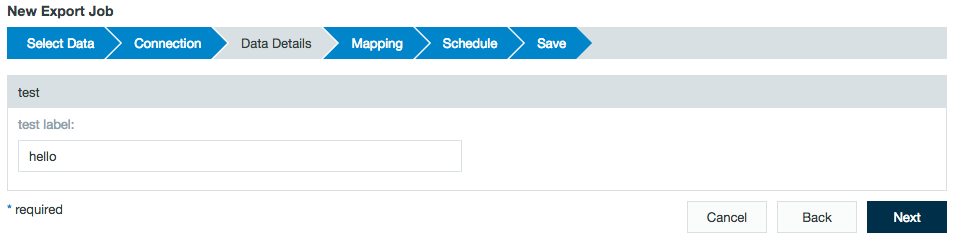

- The details page of this export job type should provide only one input text field, with the label "test label" and the default value "hello".

- When you trigger this export you should see that all records are printed to the console.

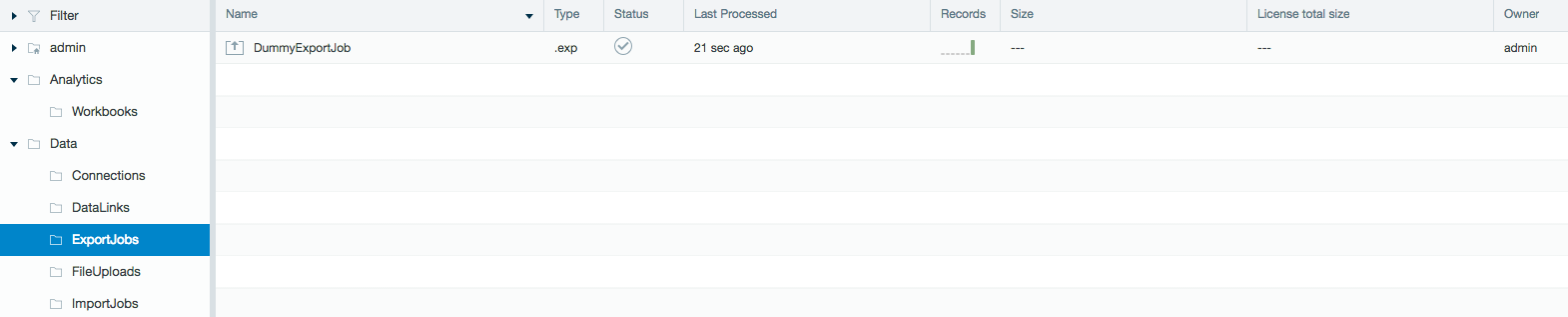

- Finally, you can run your new export and see its execution status.

Code Example Snippets for the Dummy Implementation

DummyDataStoreType.java:

    package datameer.das.plugin.tutorial06;

    import datameer.dap.sdk.datastore.DataStoreModel;
    import datameer.dap.sdk.datastore.DataStoreType;
    import datameer.dap.sdk.entity.DataStore;
    import datameer.dap.sdk.property.PropertyDefinition;
    import datameer.dap.sdk.property.PropertyGroupDefinition;
    import datameer.dap.sdk.property.PropertyType;
    import datameer.dap.sdk.property.WizardPageDefinition;

    public class DummyDataStoreType extends DataStoreType {

        public static final String ID = "das.DummyDataStore";

        public DummyDataStoreType() {
            // This connection type provides a dummy import job type and a dummy export job type.
            super(new DummyImportJobType(), new DummyExportJobType());
        }

        @Override
        public DataStoreModel createModel(DataStore dataStore) {
            return new DummyDataStoreModel(dataStore);
        }

        @Override
        public String getId() {
            return ID;
        }

        @Override
        public String getName() {
            return "Dummy";
        }

        @Override
        public WizardPageDefinition createDetailsWizardPage() {
            // Details page of the connection: one required string property with help text.
            WizardPageDefinition page = new WizardPageDefinition("Details");
            PropertyGroupDefinition group = page.addGroup("Dummy");
            PropertyDefinition propertyDefinition = new PropertyDefinition("dummyKey", "Dummy", PropertyType.STRING);
            propertyDefinition.setRequired(true);
            propertyDefinition.setHelpText("Some Help Text");
            group.addPropertyDefinition(propertyDefinition);
            return page;
        }
    }

DummyExportJobType.java:

    package datameer.das.plugin.tutorial06;

    import java.io.IOException;
    import java.io.Serializable;

    import org.apache.hadoop.conf.Configuration;

    import datameer.dap.sdk.common.DasContext;
    import datameer.dap.sdk.common.Record;
    import datameer.dap.sdk.entity.DataSinkConfiguration;
    import datameer.dap.sdk.exportjob.ExportJobType;
    import datameer.dap.sdk.exportjob.OutputAdapter;
    import datameer.dap.sdk.property.PropertyDefinition;
    import datameer.dap.sdk.property.PropertyGroupDefinition;
    import datameer.dap.sdk.property.PropertyType;
    import datameer.dap.sdk.property.WizardPageDefinition;
    import datameer.dap.sdk.schema.RecordType;
    import datameer.dap.sdk.util.ManifestMetaData;

    public class DummyExportJobType implements ExportJobType, Serializable {

        private static final long serialVersionUID = ManifestMetaData.SERIAL_VERSION_UID;

        @SuppressWarnings("serial")
        @Override
        public OutputAdapter createModel(DasContext dasContext, DataSinkConfiguration configuration, RecordType fieldTypes) {
            return new OutputAdapter() {

                @Override
                public void write(Record record) {
                    // Prints the record to the console instead of performing a real save.
                    System.out.println(record);
                }

                // The remaining life cycle methods are intentionally empty in this dummy adapter.
                @Override
                public void initializeExport(Configuration hadoopConf) {
                }

                @Override
                public void finalizeExport(Configuration hadoopConf, boolean success) throws IOException {
                }

                @Override
                public void disconnectExportInstance(int adapterIndex) {
                }

                @Override
                public void connectExportInstance(Configuration hadoopConf, int adapterIndex) {
                }

                @Override
                public boolean canRunInParallel() {
                    return false;
                }

                @Override
                public FieldTypeConversionStrategy getFieldTypeConversionStrategy() {
                    return FieldTypeConversionStrategy.SHEET_FIELD_TYPES;
                }
            };
        }

        @Override
        public void populateWizardPage(WizardPageDefinition page) {
            // Details page of the export job: one text field labeled "test label" with the default value "hello".
            PropertyGroupDefinition addGroup = page.addGroup("test");
            PropertyDefinition propertyDefinition = new PropertyDefinition("testkey", "test label", PropertyType.STRING, "hello");
            addGroup.addPropertyDefinition(propertyDefinition);
        }

        @Override
        public boolean isWritingToFileSystem() {
            return false;
        }
    }
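The dummy adapter only prints each record. As a rough sketch of how the same hooks might be used for a real save, the following self-contained example prepares a buffer in connectExportInstance, collects one entry per record in write, and hands the buffer to a sink in disconnectExportInstance. SketchAdapter, InMemorySinkAdapter, and InMemorySinkDemo are hypothetical stand-ins that borrow only the method names from the code above. They are not SDK types: the real OutputAdapter methods take a Hadoop Configuration and a Record, which are replaced here by plain Java types so the example runs without the SDK.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the SDK's OutputAdapter. Only the method names
// come from the tutorial code; the parameter types are simplified.
interface SketchAdapter {
    void connectExportInstance(int adapterIndex);
    void write(String record);
    void disconnectExportInstance(int adapterIndex);
}

// Buffers the records of one task and hands them to the sink on disconnect.
class InMemorySinkAdapter implements SketchAdapter {
    // Stands in for an external system such as a database table (assumption).
    final List<String> sink = new ArrayList<>();
    private List<String> buffer;

    @Override
    public void connectExportInstance(int adapterIndex) {
        buffer = new ArrayList<>();
    }

    @Override
    public void write(String record) {
        if (buffer == null) {
            throw new IllegalStateException("write called before connectExportInstance");
        }
        buffer.add(record);
    }

    @Override
    public void disconnectExportInstance(int adapterIndex) {
        sink.addAll(buffer);
        buffer = null;
    }
}

class InMemorySinkDemo {
    public static void main(String[] args) {
        InMemorySinkAdapter adapter = new InMemorySinkAdapter();
        adapter.connectExportInstance(0);
        adapter.write("alice,42");
        adapter.write("bob,7");
        adapter.disconnectExportInstance(0);
        System.out.println(adapter.sink); // prints [alice,42, bob,7]
    }
}
```

Whether buffering until disconnect is appropriate depends on the target system; it is used here only to make the role of each hook visible.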

Life Cycles of Different Execution Frameworks

Each execution framework used for export has a different life cycle and execution order. The machine on which each method is executed is given in parentheses. "Conductor" means the method is executed on the machine where the Datameer server is installed. "Mapper/cluster" means the method is executed within the YARN cluster on the mapper. After you start an export job in Datameer, the job is sent to the YARN cluster and executed there.

For Spark, MapReduce, or Tez, the following methods are executed in this order:

1. initializeExport (conductor)
2. connectExportInstance (mapper/cluster)
3. write (mapper/cluster)
4. disconnectExportInstance (mapper/cluster)
5. finalizeExport (conductor)

For SmallJob, the following methods are executed in this order:

1. initializeExport (mapper/cluster)
2. connectExportInstance (mapper/cluster)
3. write (mapper/cluster)
4. disconnectExportInstance (mapper/cluster)
5. finalizeExport (mapper/cluster)
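The two orderings differ only in where the job-level methods run. The sketch below encodes that as a small lookup, which can be handy when deciding where to place setup code. The class ExportLifecycleLocations, its Location enum, and the framework strings are illustrative names for this example only, not SDK constants.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ExportLifecycleLocations {
    // Where a life cycle method runs, as described in the text.
    enum Location { CONDUCTOR, MAPPER_CLUSTER }

    // Returns method name -> location, in call order, for the given framework.
    // Any label other than "SmallJob" is treated like Spark, MapReduce, or Tez.
    static Map<String, Location> callOrder(String framework) {
        boolean smallJob = "SmallJob".equals(framework);
        Location jobLevel = smallJob ? Location.MAPPER_CLUSTER : Location.CONDUCTOR;
        Map<String, Location> order = new LinkedHashMap<>();
        order.put("initializeExport", jobLevel);
        order.put("connectExportInstance", Location.MAPPER_CLUSTER);
        order.put("write", Location.MAPPER_CLUSTER);
        order.put("disconnectExportInstance", Location.MAPPER_CLUSTER);
        order.put("finalizeExport", jobLevel);
        return order;
    }

    public static void main(String[] args) {
        for (String framework : new String[] {"Spark", "MapReduce", "Tez", "SmallJob"}) {
            System.out.println(framework + ": " + callOrder(framework));
        }
    }
}
```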

The life cycle methods are called at the following frequencies:

- Initialize/finalize is called once per job.

- Connect/disconnect is called once per task.

- Write is called once per record.
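To make these frequencies concrete, here is a minimal simulation of one export job with two tasks. CountingAdapter and LifecycleDriverSketch are hypothetical classes written for this illustration; they only reuse the method names from the tutorial and drop the Hadoop Configuration parameters. The tasks run sequentially here for simplicity, which is an assumption of the sketch and not a statement about how the cluster schedules them.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical simplified adapter that only counts how often each hook is called.
class CountingAdapter {
    int initializeCalls, connectCalls, writeCalls, disconnectCalls, finalizeCalls;

    void initializeExport() { initializeCalls++; }
    void connectExportInstance(int adapterIndex) { connectCalls++; }
    void write(String record) { writeCalls++; }
    void disconnectExportInstance(int adapterIndex) { disconnectCalls++; }
    void finalizeExport(boolean success) { finalizeCalls++; }
}

class LifecycleDriverSketch {
    // Runs one export job; each inner list holds the records of one task.
    static void runJob(CountingAdapter adapter, List<List<String>> tasks) {
        adapter.initializeExport();                  // once per job
        for (int i = 0; i < tasks.size(); i++) {
            adapter.connectExportInstance(i);        // once per task
            for (String record : tasks.get(i)) {
                adapter.write(record);               // once per record
            }
            adapter.disconnectExportInstance(i);     // once per task
        }
        adapter.finalizeExport(true);                // once per job
    }

    public static void main(String[] args) {
        CountingAdapter adapter = new CountingAdapter();
        runJob(adapter, Arrays.asList(
                Arrays.asList("r1", "r2", "r3"),
                Arrays.asList("r4", "r5")));
        // prints initialize=1 connect=2 write=5 disconnect=2 finalize=1
        System.out.println("initialize=" + adapter.initializeCalls
                + " connect=" + adapter.connectCalls
                + " write=" + adapter.writeCalls
                + " disconnect=" + adapter.disconnectCalls
                + " finalize=" + adapter.finalizeCalls);
    }
}
```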

Source Code

This tutorial can be found in the Datameer plug-in SDK under plugin-tutorials/tutorial06.